|

Ancient Blocks (from gamedev)

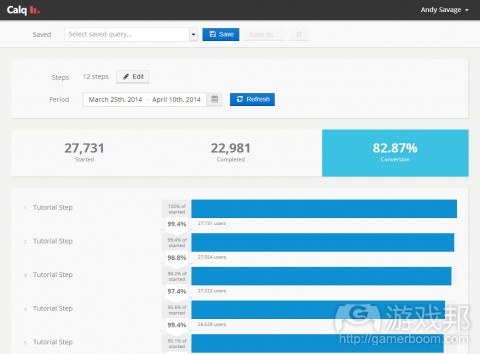


Standard Gameplay and IAP Metrics for Mobile Games

By Andy Savage

Over the next few weeks I am publishing some example analytics for optimising gameplay and customer conversion. I will be using a real-world example game, “Ancient Blocks”, which is available on the App Store if you want to see the game in full.

The reports in this article were produced using Calq, but you could use an alternative service or build these metrics in-house. This series is designed to be “What to measure” rather than “How to measure it”.

Attached Image: GameStrip.jpg

Common KPIs

The high-level key performance indicators (KPIs) are typically similar across all mobile games, regardless of genre. Most developers will have KPIs that include:

- D1, D7, D30 retention: how often players are coming back.
- DAU, WAU, MAU: daily, weekly and monthly active users, a measurement of the active player base.
- User LTVs: the lifetime value of a player (typically measured over various cohorts, such as gender, location, or acquiring ad campaign).
- DARPU: daily average revenue per user, i.e. the amount of revenue generated per active player per day.
- ARPPU: average revenue per paying user, a measurement related to LTV that only counts the subset of users who are actually paying.

There will also be game-specific KPIs. These give insight into isolated parts of the game so that they can be improved. The ultimate goal is to improve the high-level KPIs by improving as many sub-game areas as possible.
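The common KPIs listed above reduce to simple arithmetic over install, session, and revenue data. The following is a minimal sketch in Python, not how Calq or any other service computes them. The function names, the data layout (a dict of install dates and a dict of play dates per player), and the sample values are all assumptions made up for illustration.

```python
from datetime import date, timedelta


def retention(installs, sessions, day_n):
    """Fraction of installed players who played exactly day_n days after install."""
    if not installs:
        return 0.0
    returned = sum(
        1
        for player, installed in installs.items()
        if installed + timedelta(days=day_n) in sessions.get(player, set())
    )
    return returned / len(installs)


def active_users(sessions, end_day, window_days):
    """Unique players with at least one session in the window ending on end_day.

    window_days=1 gives DAU, 7 gives WAU, 30 gives MAU.
    """
    start = end_day - timedelta(days=window_days - 1)
    return sum(
        1 for days in sessions.values() if any(start <= d <= end_day for d in days)
    )


def darpu(revenue_by_player, dau):
    """Revenue for one day divided by that day's active users."""
    return sum(revenue_by_player.values()) / dau if dau else 0.0


def arppu(revenue_by_player):
    """Average revenue counting only players who actually paid."""
    payers = [amount for amount in revenue_by_player.values() if amount > 0]
    return sum(payers) / len(payers) if payers else 0.0


# Hypothetical sample data: four players who all installed on the same day.
installs = {p: date(2024, 1, 1) for p in ("a", "b", "c", "d")}
sessions = {
    "a": {date(2024, 1, 1), date(2024, 1, 2), date(2024, 1, 8)},
    "b": {date(2024, 1, 1), date(2024, 1, 2)},
    "c": {date(2024, 1, 1)},
    "d": {date(2024, 1, 1), date(2024, 1, 5)},
}
revenue_jan_2 = {"a": 3.0, "b": 0.0}  # revenue per active player on 2 January

d1 = retention(installs, sessions, 1)              # 0.5
d7 = retention(installs, sessions, 7)              # 0.25
dau = active_users(sessions, date(2024, 1, 2), 1)  # 2
wau = active_users(sessions, date(2024, 1, 8), 7)  # 3
print(d1, d7, dau, wau, darpu(revenue_jan_2, dau), arppu(revenue_jan_2))
```

Note that this sketch treats DN retention as "played on exactly day N after install"; some teams instead count players who returned on day N or later, so the definition should be agreed before comparing numbers.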
Retention

Retention is a measure of how often players come back to your game after a given period. D1 (day 1) retention is how many players returned to play the next day, D7 is how many returned 7 days later, and so on. Retention is a critical indicator of how sticky your game is.

Arguably it’s more important to measure retention than it is to measure revenue. If you have great retention but poor user lifetime values (LTV), then you can normally refine and improve the latter. The opposite is not true: it’s much harder to monetise an application with low retention rates.

A retention grid is a good way to visualize game retention over a period.

When the game is iterated upon (either by adding or removing features, or by adjusting existing ones), retention can be checked to see whether the changes had a positive impact.

Active user base

You may have already heard of “Daily/Weekly/Monthly” Active Users. These are industry-standard measurements showing the size of your active user base. WAU, for example, is a count of the unique players that have played in the last 7 days. Using DAU/WAU/MAU measurements is an easy way to spot whether your audience is growing, shrinking, or flat.

Active user measurements need to be analysed alongside retention data. Your user base could be flat if you have lots of new users but are losing existing users (known as “churn”) at the same rate.

Game-specific KPIs

In addition to the common KPIs, each game will have additional metrics that are specific to the product in question. This could include data on player progression through the game (such as levels), game mechanics and balance metrics, viral and sharing loops, etc.

Most user journeys (paths of interaction that a user can take in your application, such as a menu to start a new game) will also be measured so they can be iterated on and optimised.

For Ancient Blocks, game-specific metrics include:

Player progression:
- Which levels are being completed.
- Whether players are replaying on a harder difficulty.
Level difficulty:
- How many attempts it takes to finish a level.
- How much time is spent within a level.
- How many power-ups a player uses before completing a level.

In-game currency:
- When does a user spend in-game currency?
- What do they spend it on?
- What does a player normally do before they make a purchase?

In-game tutorial

When a player starts a game for the first time, it is typical for them to be shown an interactive tutorial that teaches new players how to play. This is often the first impression a user gets of your game, and as a result it needs to be extremely well refined. With a bad tutorial your D1 retention will be poor.

Ancient Blocks has a simple 10-step tutorial that shows the user how to play (by dragging blocks vertically until they are aligned).

Goals

The data collected about the tutorial needs to show any areas that could be improved. Typically these are areas where users are getting stuck or taking too long.

- Identify any sticking points within the tutorial (points where users get stuck).
- Iterate on these tutorial steps to improve the conversion rate (the percentage of players that get to the end successfully).

Metrics

In order to improve the tutorial, a set of tutorial-specific metrics should be defined. For Ancient Blocks the key metrics we need are:

- The percentage of players that make it through each tutorial step.
- The percentage of players that actually finish the tutorial.
- The amount of time spent on each step.
- The percentage of players that go on to play the level after the tutorial.

Implementation

Tracking tutorial steps is straightforward using an action-based analytics platform (in our case, Calq). Ancient Blocks uses a single action called Tutorial Step. This action includes a custom attribute called Step to indicate which tutorial step the user is on (0 indicates the first step). We also want to track how long a user spends on each step (in seconds). To do this we also include a property called Duration.
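The Tutorial Step action described above can be sketched as a small helper that times each step and emits one event per completed step. This is only an illustration in Python: the `TutorialTracker` class and its `send` callback are hypothetical stand-ins, not Calq's client API, and only the action name and the Step and Duration properties come from the article.

```python
import time


class TutorialTracker:
    """Emits one "Tutorial Step" action per completed tutorial step.

    `send` is a hypothetical callback taking (action_name, properties); in a
    real game it would forward to whatever analytics client is in use.
    """

    def __init__(self, send, clock=time.monotonic):
        self._send = send
        self._clock = clock
        self._step_started = clock()  # the tutorial starts when the tracker is created

    def step_completed(self, step):
        now = self._clock()
        self._send(
            "Tutorial Step",
            {
                "Step": step,  # 0 is the first tutorial step
                "Duration": round(now - self._step_started, 2),  # seconds on this step
            },
        )
        self._step_started = now  # the next step starts timing from here


# Usage with a fake clock so the example is deterministic.
fake_times = iter([0.0, 4.0, 10.5])
events = []
tracker = TutorialTracker(
    send=lambda action, props: events.append((action, props)),
    clock=lambda: next(fake_times),
)
tracker.step_completed(0)  # took 4.0 seconds
tracker.step_completed(1)  # took 6.5 seconds
print(events)
```

Passing the clock in as a parameter is a design choice for testability; a monotonic clock is used by default so that changes to the device's wall-clock time do not distort durations.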
Action: Tutorial Step

Properties:
- Step: The current tutorial step (0 for start, then 1, 2, 3, etc.).
- Duration: The duration (in seconds) the user took to complete the step.

Analysis

Analysing the tutorial data is reasonably easy. Most of the metrics can be found by creating a simple conversion funnel, with one funnel step for each tutorial stage.

The completed funnel query shows the conversion rate of the entire tutorial on a step-by-step basis. From here it is very easy to see which steps “lose” the most users.

Attached Image: GameExample-Tutorial-Funnel2.png

As you can see from the results, step 4 has a conversion rate of around 97%, compared to 99% for the other steps. This step would be a good candidate to improve. Even though the gap is only a percentage point or two, that still means around $1k in lost revenue per month on that step alone. For a popular game the difference would be much larger.

Part 2 continues next week, looking at metrics on game balance and player progression. (source: gamedev)
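The tutorial funnel from the Analysis section can be approximated offline from raw Tutorial Step events. The sketch below is written in Python under stated assumptions: events are available as `(player_id, step)` pairs, the function names are hypothetical, and the sample data is invented. It is not the query Calq runs.

```python
from collections import defaultdict


def tutorial_funnel(events, total_steps):
    """Return (step, players_reached, conversion_from_previous_step) rows.

    `events` is an iterable of (player_id, step) pairs. The funnel is strict:
    a player only counts for a step if they also reached every earlier step.
    """
    reached = defaultdict(set)
    for player_id, step in events:
        reached[step].add(player_id)

    rows = []
    previous = None
    for step in range(total_steps):
        players = set(reached[step]) if previous is None else reached[step] & previous
        if previous is None:
            conversion = 1.0  # the first step is the funnel entry point
        elif previous:
            conversion = len(players) / len(previous)
        else:
            conversion = 0.0  # nobody reached the previous step
        rows.append((step, len(players), conversion))
        previous = players
    return rows


def weakest_step(rows):
    """Step with the lowest conversion from the step before it."""
    return min(rows[1:], key=lambda row: row[2])[0]


# Hypothetical data: four players start, two drop out before step 2.
sample_events = [
    ("p1", 0), ("p1", 1), ("p1", 2),
    ("p2", 0), ("p2", 1), ("p2", 2),
    ("p3", 0), ("p3", 1),
    ("p4", 0), ("p4", 1),
]
funnel = tutorial_funnel(sample_events, total_steps=3)
for step, players, conversion in funnel:
    print(f"step {step}: {players} players, {conversion:.0%} of previous step")
print("weakest step:", weakest_step(funnel))
```

Averaging the Duration property per step alongside these rows would cover the remaining tutorial metric (time spent on each step).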

|